We all know how important it has become to handle high-dimensional data, particularly in NLP and LLM applications.

I have recently spent a considerable amount of time researching and comparing various vector databases for our GenAI-based projects.

Understanding the Need for Vector Databases:

I think it is crucial to understand why vector databases are gaining traction over RDBMS and NoSQL databases for specific purposes.

Unlike traditional databases, which store structured data in rows and columns, vector databases excel at storing and searching high-dimensional data in the form of vectors.

Example:

[0.81, 0.19, 0.58, 0.99, ...]

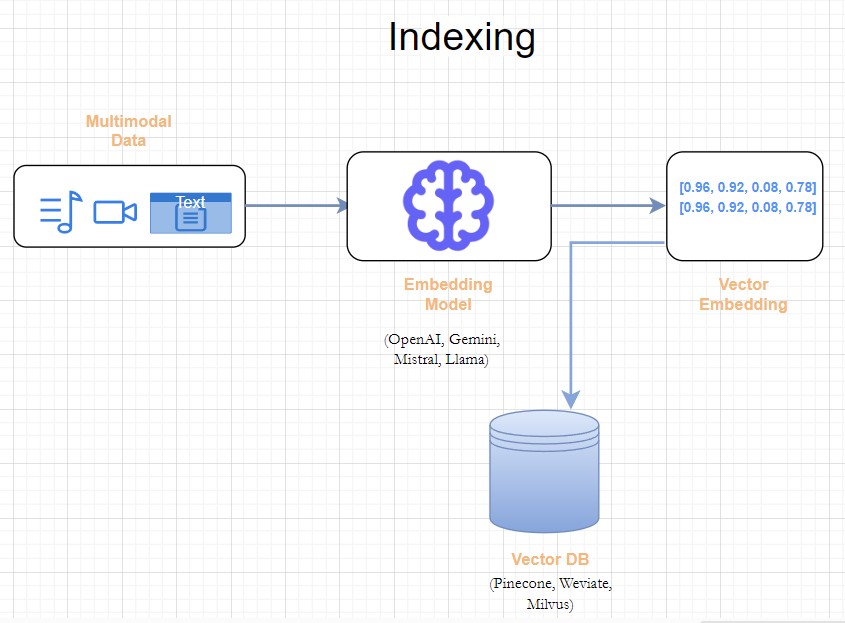

This is how indexing works in a vector DB.

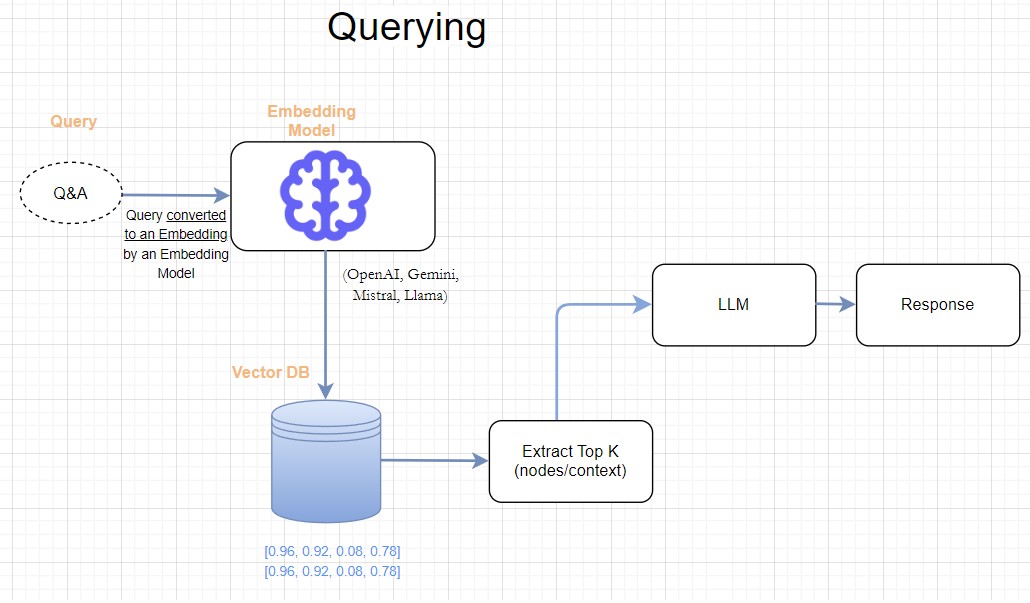

This is how querying works on top of a vector DB.
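The indexing and querying flows captioned above can be approximated by a toy, in-memory "flat" index. Indexing stores each embedding under an ID. Querying scores every stored vector against the query vector with cosine similarity and returns the top-k matches. This is a minimal sketch under stated assumptions: the `FlatIndex` class and its methods are hypothetical, the vectors are hand-made rather than produced by an embedding model, and real vector DBs replace the exhaustive scan with approximate indexes such as HNSW.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


class FlatIndex:
    """Hypothetical brute-force vector index, for illustration only."""

    def __init__(self) -> None:
        self.vectors: dict[str, list[float]] = {}

    def add(self, doc_id: str, vector: list[float]) -> None:
        # Indexing: store the embedding under its ID.
        self.vectors[doc_id] = vector

    def query(self, vector: list[float], top_k: int = 3) -> list[tuple[str, float]]:
        # Querying: score every stored vector, then keep the k most similar.
        scored = [(doc_id, cosine_similarity(vector, v)) for doc_id, v in self.vectors.items()]
        scored.sort(key=lambda pair: pair[1], reverse=True)
        return scored[:top_k]


index = FlatIndex()
index.add("doc-1", [0.81, 0.19, 0.58, 0.99])
index.add("doc-2", [0.10, 0.95, 0.20, 0.05])
index.add("doc-3", [0.79, 0.22, 0.61, 0.95])
print(index.query([0.80, 0.20, 0.60, 0.97], top_k=2))
```

The query returns `doc-1` and `doc-3`, whose vectors point in nearly the same direction as the query, while `doc-2` is left out.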

This vector representation, which we often call an embedding, is required because AI/ML models understand numbers, so data has to be converted into vector form before we can train models on it. This capability is essential for tasks like:

- Similarity Search: Finding items similar to a given item based on their vector representations.

- Question Answering: Answering user queries in a conversational manner.

- Semantic Search: Understanding the underlying meaning or intent of a search query and delivering accurate results.

- Document Classification: Classifying a document into one of two classes (binary) or one of several classes (multi-class).

These are just a few examples.
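As one illustration of the document-classification task, a document can be labelled by finding its nearest labelled neighbours in embedding space and taking a majority vote (k-nearest-neighbour classification). This is a sketch of one possible approach, not the only one; training a classifier on top of the embeddings is another common choice. The `knn_classify` function, the two-dimensional "embeddings", and the labels are all hypothetical.

```python
import math
from collections import Counter


def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def knn_classify(query, labelled, k=3):
    """Label `query` by majority vote among its k most similar labelled embeddings."""
    neighbours = sorted(
        labelled, key=lambda item: cosine_similarity(query, item[0]), reverse=True
    )[:k]
    votes = Counter(label for _, label in neighbours)
    return votes.most_common(1)[0][0]


# Hypothetical 2-D "embeddings" labelled as invoices or contracts.
# Multi-class works the same way: just add more labels.
training = [
    ([0.9, 0.1], "invoice"),
    ([0.8, 0.2], "invoice"),
    ([0.85, 0.15], "invoice"),
    ([0.1, 0.9], "contract"),
    ([0.2, 0.8], "contract"),
]
print(knn_classify([0.7, 0.3], training, k=3))
```

Here the three nearest neighbours of `[0.7, 0.3]` are all invoices, so the prediction is `invoice`.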

Why Use a Vector DB over an RDBMS for Embedding-Based Apps:

- Vector DBs store collections of high-dimensional vectors, whereas an RDBMS uses a tabular structure of rows and columns.

- Vector DBs identify each record by a unique identifier (ID) with fewer restrictions, which makes it easy to build applications on top of them, whereas an RDBMS relies on more complex primary and foreign keys.

- Vector DBs use specialized indexes (such as inverted indexes and HNSW) built for fast similarity search, which makes them suitable for LLM apps, whereas an RDBMS typically relies on B-tree indexes.

- APIs are available for CRUD-style operations such as insert, search, update, upsert, and delete, which are much easier to use than writing SQL against an RDBMS.
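To illustrate the ID-keyed, CRUD-style API described in the list above, here is a hypothetical in-memory collection. The `VectorCollection` class and its method names mirror the listed operations but are not any specific vector DB's SDK; real clients differ in signatures and semantics. The sketch assumes cosine similarity and an exhaustive scan for search.

```python
import math
from typing import Optional


class VectorCollection:
    """Hypothetical in-memory collection keyed by unique string IDs (illustration only)."""

    def __init__(self, dim: int) -> None:
        self.dim = dim
        self.records: dict = {}  # record_id -> (vector, metadata)

    def _check_dim(self, vector) -> None:
        if len(vector) != self.dim:
            raise ValueError(f"expected {self.dim} dimensions, got {len(vector)}")

    def insert(self, record_id: str, vector, metadata: Optional[dict] = None) -> None:
        # Insert fails if the ID already exists.
        if record_id in self.records:
            raise KeyError(f"{record_id} already exists")
        self._check_dim(vector)
        self.records[record_id] = (list(vector), metadata or {})

    def update(self, record_id: str, vector, metadata: Optional[dict] = None) -> None:
        # Update fails if the ID does not exist yet.
        if record_id not in self.records:
            raise KeyError(f"{record_id} not found")
        self._check_dim(vector)
        self.records[record_id] = (list(vector), metadata or {})

    def upsert(self, record_id: str, vector, metadata: Optional[dict] = None) -> None:
        # Upsert: insert if the ID is new, overwrite if it already exists.
        self._check_dim(vector)
        self.records[record_id] = (list(vector), metadata or {})

    def delete(self, record_id: str) -> None:
        # Deleting an unknown ID is a no-op in this sketch.
        self.records.pop(record_id, None)

    def search(self, query, top_k: int = 1):
        # Exhaustive cosine-similarity search; real DBs use ANN indexes instead.
        def cosine(a, b):
            dot = sum(x * y for x, y in zip(a, b))
            return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

        scored = [(rid, cosine(query, vec)) for rid, (vec, _) in self.records.items()]
        return sorted(scored, key=lambda pair: pair[1], reverse=True)[:top_k]


col = VectorCollection(dim=3)
col.insert("a", [1.0, 0.0, 0.0], {"source": "faq"})
col.upsert("b", [0.0, 1.0, 0.0])
col.upsert("b", [0.0, 0.9, 0.1])  # same ID: overwritten, not duplicated
col.update("a", [0.9, 0.1, 0.0])
col.delete("missing")
print(len(col.records), col.search([1.0, 0.0, 0.0]))
```

Because records are addressed only by their IDs, there is no schema or key relationship to maintain; the trade-off is that any relational structure has to live in the metadata or in the application.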

I spent a lot of time figuring out which vector DB to use and noted down the points below. Choose the right one depending on your use case.

For example, if you want a vertically scalable, low-latency DB, you can choose Pinecone or Qdrant. If you want to do vector search using SQL, choose pgvector.

| Vector DB | When to Use | Scalability | Open Source / Enterprise | Functionality | Performance | Type | Suitable For |
|---|---|---|---|---|---|---|---|
| FAISS | Efficient for large datasets | NA | Open source | GPU availability | ? | Vector search | Offline evaluations/POCs; not suitable for production |
| Milvus | 1. Supports massive vector search; 2. Auto index management; 3. GPU availability | Horizontal | Open source | 11 index types, multi-vector query, attribute filtering, GPU, ... | Low latency | Purpose-built vector DB | Large data volumes; scalable |
| Chroma | Multimodal data | NA | Open source | ? | | Lightweight vector DB | Not suitable for production |
| Elasticsearch | Mature, full-text + vector search | Horizontal | Commercial | High flexibility, query filtering | High latency | Vector search plugins | Suitable for production; flexible queries can help improve efficiency |
| pgvector | Vector search using SQL | NA | Open source; Azure pgvector (enterprise); GCP pgvector (enterprise) | Supports one index only | Low latency | Postgres extension | Efficient, but depends on Postgres |
| Pinecone | Scalable, instant indexing | Vertical | Enterprise | | Low latency | Enterprise cloud-native vector DB | Production-ready and efficient |
| Qdrant | Filtering, precise matching | Vertical | Open source | Additional payloads to filter results | Low latency | | Moderate data volumes; scalable |
| LanceDB | High performance, real time | NA | Open source | | | Lightweight vector DB | Production-ready and efficient |
| Weaviate | Knowledge graph, GraphQL | Horizontal | Open source | Hybrid search | Low latency | Lightweight vector DB | Moderate data volumes; scalable |
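Several rows above mention filtering: Milvus's attribute filtering, Qdrant's payloads used to filter results, and Elasticsearch's query filtering. As a rough sketch of the idea, assuming a simple pre-filtering strategy, the code below first keeps only the records whose metadata ("payload") matches every filter condition, then ranks the survivors by similarity. The record layout and the `filtered_search` function are hypothetical; each DB has its own filter syntax and may apply filters during index traversal rather than before it.

```python
import math


def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def filtered_search(records, query, filters, top_k=2):
    """Pre-filter records on exact-match payload fields, then rank by cosine similarity."""
    candidates = [
        r for r in records
        if all(r["payload"].get(key) == value for key, value in filters.items())
    ]
    candidates.sort(key=lambda r: cosine_similarity(query, r["vector"]), reverse=True)
    return [r["id"] for r in candidates[:top_k]]


# Hypothetical records: each vector carries a payload of filterable attributes.
records = [
    {"id": 1, "vector": [0.9, 0.1], "payload": {"lang": "en", "year": 2023}},
    {"id": 2, "vector": [0.8, 0.2], "payload": {"lang": "de", "year": 2023}},
    {"id": 3, "vector": [0.2, 0.8], "payload": {"lang": "en", "year": 2024}},
    {"id": 4, "vector": [0.7, 0.3], "payload": {"lang": "en", "year": 2023}},
]
print(filtered_search(records, [1.0, 0.0], {"lang": "en"}))
```

Record 2 is very close to the query but is excluded by the `lang` filter, so the result is `[1, 4]`.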

Example Code to Index Documents in Pinecone (Enterprise DB):

In the code below, the `create_ingestion_pipeline` function uses a `SentenceSplitter` to split the documents into chunks and applies an embedding model (e.g., OpenAI or Gemini) to extract the embeddings. The `store_embeddings_in_pinecone` function then indexes those embeddings in Pinecone.

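```python
import os

from llama_index.core.ingestion import IngestionPipeline
from llama_index.core.node_parser import SentenceSplitter
from llama_index.core.storage.docstore import SimpleDocumentStore
from llama_index.embeddings.openai import OpenAIEmbedding
from llama_index.vector_stores.pinecone import PineconeVectorStore
from pinecone import Pinecone

# Assumption: OpenAI embeddings; any LlamaIndex embedding model (e.g. Gemini) can be swapped in.
embed_model = OpenAIEmbedding()

# Assumption: the Pinecone API key is read from an environment variable.
pinecone_key = os.environ["PINECONE_API_KEY"]


def create_ingestion_pipeline(vector_store):
    """Build a pipeline that chunks documents, embeds the chunks, and writes them to vector_store."""
    sentence_splitter = SentenceSplitter(chunk_size=1024, chunk_overlap=20)

    pipeline = IngestionPipeline(
        transformations=[
            sentence_splitter,  # split documents into chunks of up to 1024 tokens, 20-token overlap
            embed_model,        # attach an embedding vector to every chunk
        ],
        vector_store=vector_store,
        docstore=SimpleDocumentStore(),  # document management: avoids duplicates in the index
    )
    return pipeline


def store_embeddings_in_pinecone(documents):
    """Extract embeddings (as defined in the IngestionPipeline) and store them in Pinecone.

    Args:
        documents (list[Document]): LlamaIndex documents to chunk, embed, and index.

    Returns:
        PineconeVectorStore: the vector store backed by the "demo" index.
    """
    pinecone_client = Pinecone(api_key=pinecone_key)
    # The "demo" index must already exist, with a dimension matching the embedding model.
    pinecone_index = pinecone_client.Index("demo")
    vector_store = PineconeVectorStore(pinecone_index=pinecone_index)

    pipeline = create_ingestion_pipeline(vector_store)
    nodes = pipeline.run(documents=documents)  # the processed chunks, also written to Pinecone
    return vector_store
```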
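To make `chunk_size` and `chunk_overlap` more concrete, here is a simplified chunker that works on whitespace-separated words. This is an assumption-laden stand-in, not LlamaIndex's `SentenceSplitter`, which counts tokens and prefers to break on sentence boundaries. It only shows how consecutive chunks share an overlapping window, so that context spanning a chunk boundary is not lost. The `chunk_words` function is hypothetical.

```python
def chunk_words(text: str, chunk_size: int, chunk_overlap: int) -> list[str]:
    """Split text into chunks of at most chunk_size words; neighbours share chunk_overlap words."""
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("chunk_overlap must be >= 0 and smaller than chunk_size")
    words = text.split()
    step = chunk_size - chunk_overlap  # how far the window advances each time
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # this window already reached the end of the text
    return chunks


text = "one two three four five six seven eight nine ten"
for chunk in chunk_words(text, chunk_size=4, chunk_overlap=1):
    print(chunk)
```

With `chunk_size=4` and `chunk_overlap=1`, each chunk repeats the last word of the previous one: "one two three four", "four five six seven", "seven eight nine ten".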