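If you have used OpenAI and would like it to answer questions about your own data, this post shows one way to build such a chatbot with LlamaIndex and a Gradio web UI. Strictly speaking, this is not fine-tuning, because the model's weights never change. Instead, LlamaIndex builds an index of your documents and, for each question, passes the most relevant passages to the model as context.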

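Let's set up the environment first:

```shell
pip3 install openai
pip3 install "llama-index<0.10"
pip3 install langchain
pip3 install pypdf
pip3 install gradio
```

The code below imports `ServiceContext`, `LLMPredictor` and `GPTVectorStoreIndex` directly from `llama_index`. That is the older API, which was reorganized under `llama_index.core` in release 0.10, hence the version pin. The code also imports from `langchain`, which is installed separately. These libraries change quickly, so if an import still fails, try pinning older releases of `openai` and `langchain` as well.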
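Before moving on, you can confirm that everything installed by checking that each package can be found. This is a minimal sketch using only the standard library; the module names are my assumption of each package's import name (for example, `llama-index` is imported as `llama_index`), and `missing_modules` is a hypothetical helper.

```python
import importlib.util

# Assumed import names for the packages installed above
REQUIRED_MODULES = ["openai", "llama_index", "langchain", "pypdf", "gradio"]


def missing_modules(names):
    """Return the top-level module names that cannot be found in this environment."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_modules(REQUIRED_MODULES)
    if missing:
        print("Missing:", ", ".join(missing))
    else:
        print("All packages found.")
```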

To try it on a custom document, I downloaded "Getting ROIC right" from the EY website and asked a few questions:

Q1: Why is Return on Invested Capital Important?

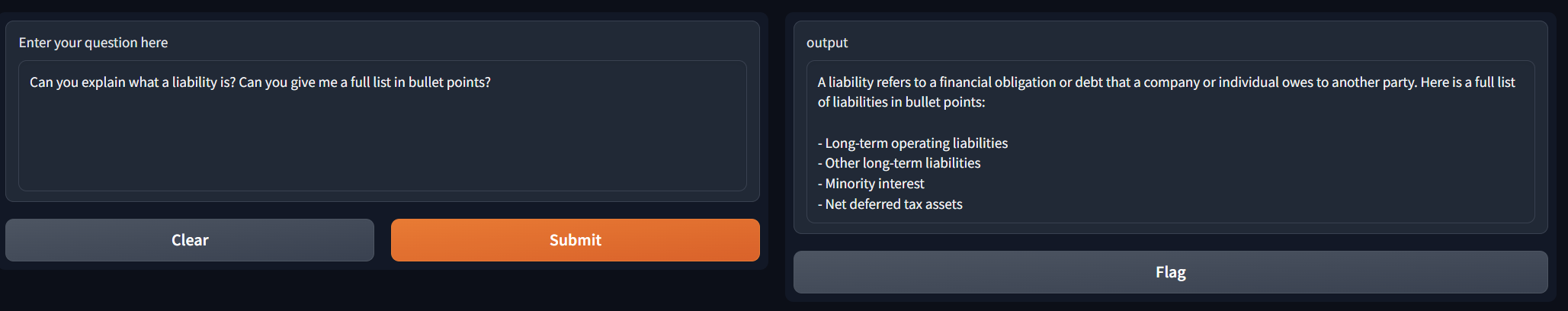

Q2: Can you explain what a liability is? Can you give me a full list in bullet points?

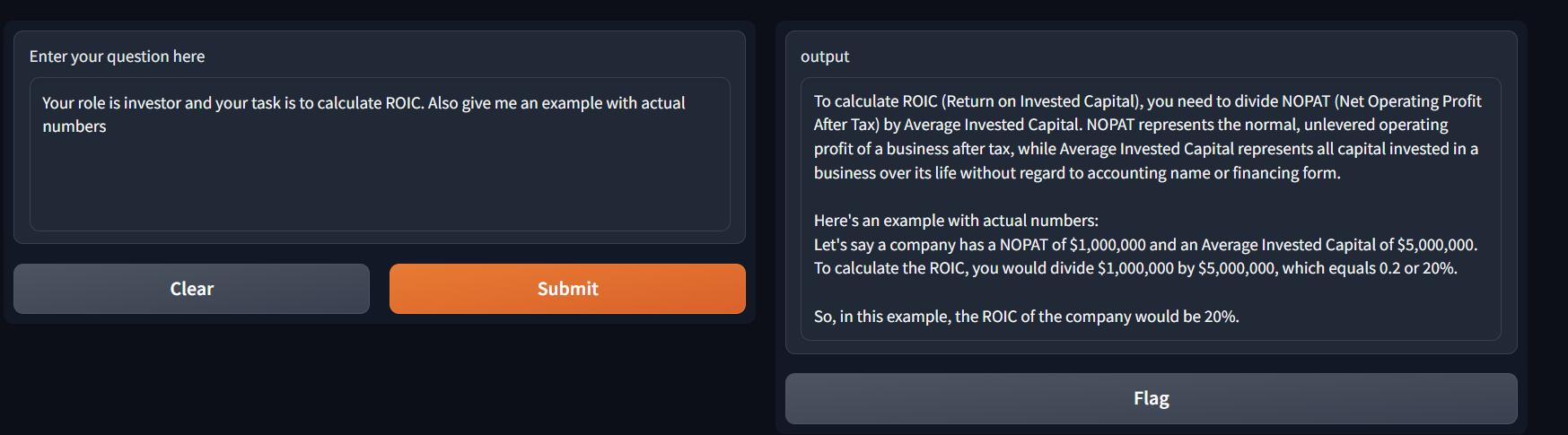

Q3: Your role is investor and your task is to calculate ROIC. Also give me an example with actual numbers.
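Since Q3 asks the chatbot to calculate ROIC with actual numbers, it is worth checking its arithmetic independently. This is a minimal sketch assuming the common textbook definition ROIC = NOPAT / invested capital, with NOPAT = operating income × (1 − tax rate); the EY document may adjust invested capital differently, and the figures below are made up for illustration.

```python
def nopat(operating_income, tax_rate):
    """Net operating profit after tax: operating income times (1 - tax rate)."""
    return operating_income * (1 - tax_rate)


def roic(operating_income, tax_rate, invested_capital):
    """Return on invested capital as a fraction: NOPAT / invested capital."""
    if invested_capital <= 0:
        raise ValueError("invested_capital must be positive")
    return nopat(operating_income, tax_rate) / invested_capital


# Hypothetical figures, not taken from the EY document:
# operating income 500, tax rate 25%, invested capital 2,500
print(f"ROIC = {roic(500, 0.25, 2500):.1%}")  # prints: ROIC = 15.0%
```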

Now, let's talk about the code.

My project folder contains:

- `custom_chat.py`: the code.
- `training-data/`: where I copied the PDF.
- `indexes/`: created by LlamaIndex when the code runs; it holds the index built from the PDF content.
- `flagged/`: created by Gradio to store inputs and outputs flagged in the web UI.
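The `indexes` folder holds what LlamaIndex built from the PDF; no model is retrained. Roughly, a vector index splits the documents into chunks, stores an embedding for each chunk, and at query time retrieves the chunks most similar to the question and sends them to the LLM along with the question. The sketch below is a simplified stand-in for that idea, not LlamaIndex's implementation: word counts replace a real embedding model, and `chunk_text`, `embed`, `cosine`, `top_k` and `build_prompt` are hypothetical helpers.

```python
import math
import re
from collections import Counter


def chunk_text(text, chunk_size=50):
    """Split text into chunks of at most chunk_size words."""
    words = text.split()
    return [" ".join(words[i:i + chunk_size]) for i in range(0, len(words), chunk_size)]


def embed(text):
    """Toy stand-in for an embedding model: lowercase word counts."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(count * b[word] for word, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def top_k(question, chunks, k=2):
    """Return the k chunks most similar to the question."""
    q = embed(question)
    return sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)[:k]


def build_prompt(question, context_chunks):
    """Combine the retrieved chunks and the question into a single prompt."""
    context = "\n---\n".join(context_chunks)
    return f"Context:\n{context}\n\nUsing only the context above, answer:\n{question}"


if __name__ == "__main__":
    chunks = [
        "ROIC measures how well a company turns invested capital into profit.",
        "A liability is an obligation that the company owes to others.",
        "The weather was sunny.",
    ]
    question = "What is a liability?"
    print(build_prompt(question, top_k(question, chunks, k=1)))
```

A real index uses learned embeddings, so it also matches passages that share meaning rather than exact words.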

Here is the code in `custom_chat.py`:

```python
import os

import gradio
from langchain.chat_models import ChatOpenAI
from llama_index import (
    GPTVectorStoreIndex,
    LLMPredictor,
    ServiceContext,
    SimpleDirectoryReader,
    StorageContext,
    load_index_from_storage,
)

# Replace with your own key, or set OPENAI_API_KEY in your shell instead
os.environ["OPENAI_API_KEY"] = "INSERT_KEY_HERE"

# Maximum number of tokens the LLM may generate per answer
num_outputs = 256

# gpt-3.5-turbo is a chat model, so it is wrapped with LangChain's ChatOpenAI
llm_predictor = LLMPredictor(
    llm=ChatOpenAI(temperature=0.5, model_name="gpt-3.5-turbo", max_tokens=num_outputs)
)
service_context = ServiceContext.from_defaults(llm_predictor=llm_predictor)


def construct_index(directory_path):
    # Load every supported file in the directory (PDFs are read with pypdf)
    docs = SimpleDirectoryReader(directory_path).load_data()
    index = GPTVectorStoreIndex.from_documents(docs, service_context=service_context)

    # Directory in which the index will be stored
    index.storage_context.persist(persist_dir="indexes")
    return index


def chatbot(input_text):
    # Rebuild the storage context from the saved index
    storage_context = StorageContext.from_defaults(persist_dir="indexes")

    # Load the index with the same service_context, so queries use the
    # gpt-3.5-turbo settings above instead of the library's default LLM
    index = load_index_from_storage(storage_context, service_context=service_context)
    query_engine = index.as_query_engine()
    response = query_engine.query(input_text)

    # Return only the answer text
    return response.response


# Create the web UI using Gradio
iface = gradio.Interface(
    fn=chatbot,
    inputs=gradio.Textbox(lines=5, label="Enter your question here"),
    outputs="text",
    title="Custom-trained AI Chatbot",
)

# Build the index from the documents in the training-data folder.
# This can be skipped if you have already built the index and only need to re-run the app.
index = construct_index("training-data")

# Launch the web UI; share=True also creates a temporary public link
iface.launch(share=True)
```
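The comment near the end of the script notes that building the index can be skipped once it exists. One way to automate that is to rebuild only when the `indexes` folder is empty or missing, or older than the files in `training-data`. This is a minimal sketch; `needs_rebuild` is a hypothetical helper, and comparing file modification times is my assumption about what "out of date" means.

```python
from pathlib import Path


def needs_rebuild(data_dir="training-data", persist_dir="indexes"):
    """Return True if the saved index is missing or older than any source document.

    Assumption: the index counts as up to date when every file in persist_dir
    was modified after every file in data_dir.
    """
    persist_path = Path(persist_dir)
    index_files = (
        [p for p in persist_path.iterdir() if p.is_file()] if persist_path.is_dir() else []
    )
    if not index_files:
        return True  # nothing saved yet, so the index must be built

    data_path = Path(data_dir)
    source_files = (
        [p for p in data_path.iterdir() if p.is_file()] if data_path.is_dir() else []
    )
    if not source_files:
        return False  # no documents to index, keep whatever is saved

    newest_source = max(p.stat().st_mtime for p in source_files)
    oldest_index_file = min(p.stat().st_mtime for p in index_files)
    return newest_source > oldest_index_file


if __name__ == "__main__":
    print("Rebuild needed:", needs_rebuild())
```

In `custom_chat.py`, the unconditional `index = construct_index("training-data")` line could then become `if needs_rebuild(): construct_index("training-data")`, since `chatbot` reads the index from `indexes` on every question anyway.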