If you have deployed a Machine Learning or Deep Learning model to production, whether on-premises or in the cloud, and need to generate predictions on demand, you know how important latency is.

Loading the model from scratch for every prediction request is a very bad idea, because each load adds delay to the request. If the model is stored in the cloud, repeatedly downloading it also increases data-transfer costs and adds network latency on top.

Lazy Loading is a pattern in which we load the model only once, when the first prediction request arrives, store it in a cache, and serve every subsequent prediction request from that cache.
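The pattern can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not a production implementation: `load_model_from_storage` is a hypothetical placeholder that sleeps instead of downloading a real artifact, the "model" is a toy dict of weights, and `LazyModelCache`, `predict`, and the `s3://models/example/model.pkl` URI are names invented for this example. The lock ensures that if several requests arrive before the model is cached, only one of them performs the load.

```python
import threading
import time


def load_model_from_storage(model_uri: str) -> dict:
    """Hypothetical stand-in for downloading and deserializing a model.

    A real service would fetch the artifact from object storage here;
    this sketch just sleeps to simulate the slow download.
    """
    time.sleep(0.1)  # simulated network + deserialization cost
    return {"uri": model_uri, "weights": [0.5, -1.0, 2.0]}


class LazyModelCache:
    """Loads each model on first use and serves it from memory afterwards."""

    def __init__(self, loader):
        self._loader = loader
        self._models = {}
        self._lock = threading.Lock()
        self.load_count = 0  # how many times the loader actually ran

    def get(self, model_uri: str):
        # Fast path: the model is already cached.
        model = self._models.get(model_uri)
        if model is not None:
            return model
        # Slow path: load once, guarded so concurrent first requests
        # do not all download the same model.
        with self._lock:
            model = self._models.get(model_uri)
            if model is None:
                model = self._loader(model_uri)
                self._models[model_uri] = model
                self.load_count += 1
        return model


def predict(cache: LazyModelCache, model_uri: str, features: list) -> float:
    # Toy "inference": a dot product with the cached weights.
    model = cache.get(model_uri)
    return sum(w * x for w, x in zip(model["weights"], features))


if __name__ == "__main__":
    cache = LazyModelCache(load_model_from_storage)
    uri = "s3://models/example/model.pkl"  # hypothetical location
    for _ in range(5):
        print(predict(cache, uri, [1.0, 2.0, 3.0]))  # 4.5 each time
    print("loads:", cache.load_count)  # loads: 1
```

Only the first call pays the simulated loading delay; the remaining four read the model straight from memory.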

Advantages of Lazy Loading:

- Reduced costs:
  - If we have to load the model every time we need to generate a prediction, especially when the model is hosted in the cloud, the repeated data transfer becomes expensive.
  - With lazy loading, we load the model only once, which helps us reduce cloud bills.

- Improved latency:
  - Because the model is already in memory and ready for inference, requests skip the download from external storage, which lowers response latency. Only the first request pays the loading cost.

- Reliability:
  - Network issues or storage errors can make a model download fail, which makes the system unreliable. With lazy loading, once the model is cached, subsequent requests no longer depend on the object storage being reachable.

- Resource cleanup:
  - Because the loaded model lives in a cache, we can apply cache-eviction strategies to free the memory when the model has not been used for a long time.
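Eviction can be sketched by recording when each model was last used and dropping models that have been idle longer than a time-to-live (TTL). In this hypothetical `TTLModelCache`, eviction runs only when `evict_expired()` is called (for instance from a periodic background job, which is an assumption of this sketch), the class is not thread-safe, and `FakeClock` exists only so the timing can be demonstrated deterministically.

```python
import time


class TTLModelCache:
    """Lazy model cache that evicts models idle for longer than ttl_seconds."""

    def __init__(self, loader, ttl_seconds: float, clock=time.monotonic):
        self._loader = loader    # hypothetical function: model_uri -> model
        self._ttl = ttl_seconds
        self._clock = clock      # injectable so tests can control time
        self._entries = {}       # model_uri -> (model, last_used_time)

    def get(self, model_uri: str):
        now = self._clock()
        entry = self._entries.get(model_uri)
        if entry is None:
            # Lazy load: on first use, or again after the model was evicted.
            model = self._loader(model_uri)
        else:
            model = entry[0]
        # Refresh the last-used time on every access.
        self._entries[model_uri] = (model, now)
        return model

    def evict_expired(self) -> list:
        """Remove models idle for longer than the TTL; return their URIs."""
        now = self._clock()
        expired = [uri for uri, (_, last_used) in self._entries.items()
                   if now - last_used > self._ttl]
        for uri in expired:
            del self._entries[uri]
        return expired

    def __contains__(self, model_uri: str) -> bool:
        return model_uri in self._entries


class FakeClock:
    """Manually advanced clock used only to demonstrate eviction."""

    def __init__(self):
        self.now = 0.0

    def __call__(self) -> float:
        return self.now


if __name__ == "__main__":
    loads = []

    def loader(model_uri):
        loads.append(model_uri)  # record every real load
        return {"uri": model_uri}

    clock = FakeClock()
    cache = TTLModelCache(loader, ttl_seconds=60, clock=clock)

    cache.get("model-a")           # t=0: loaded from storage
    clock.now = 30.0
    cache.get("model-a")           # t=30: served from cache
    clock.now = 80.0
    print(cache.evict_expired())   # idle 50s <= 60s -> []
    clock.now = 100.0
    print(cache.evict_expired())   # idle 70s > 60s -> ['model-a']
    cache.get("model-a")           # reloaded lazily after eviction
    print(len(loads))              # 2
```

A TTL is only one possible eviction policy; a size-bounded LRU policy would be the natural choice when several models compete for the same memory.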

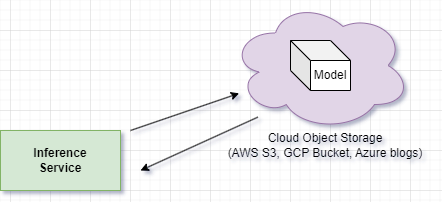

This is how it looks when we don't use lazy loading: every prediction request sends another request to the cloud object storage to retrieve the model.

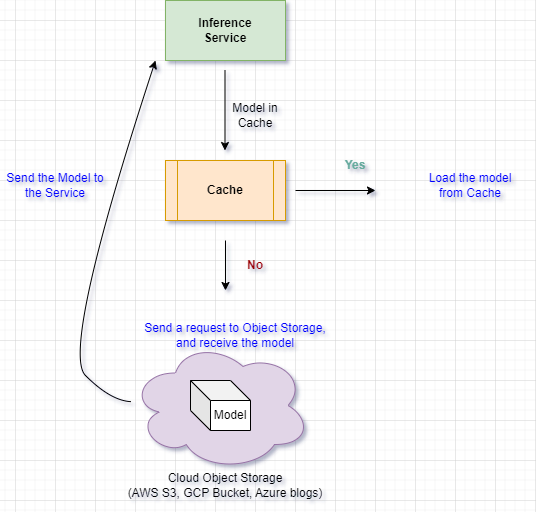

And this is how it looks when we use lazy loading.

As you can see from the image above, we place a cache between the cloud object storage and our prediction inference service to lazy load the model: it is downloaded once, on the first request, and every later request reads it from the cache.
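The difference between the two diagrams can be reproduced by counting downloads. In this sketch, `FakeObjectStorage` is a hypothetical stand-in for cloud object storage, the "prediction" is just the length of the serialized bytes, and the cache in the middle is Python's built-in `functools.lru_cache`.

```python
from functools import lru_cache


class FakeObjectStorage:
    """Hypothetical stand-in for cloud object storage that counts downloads."""

    def __init__(self):
        self.downloads = 0

    def download(self, key: str) -> bytes:
        self.downloads += 1
        return b"serialized-model:" + key.encode()


storage = FakeObjectStorage()


def predict_without_cache(key: str) -> int:
    # Without lazy loading: every request downloads the model again.
    model = storage.download(key)
    return len(model)  # placeholder for real inference


@lru_cache(maxsize=None)
def get_model(key: str) -> bytes:
    # With lazy loading: the first call downloads, later calls hit the cache.
    return storage.download(key)


def predict_with_cache(key: str) -> int:
    model = get_model(key)
    return len(model)  # placeholder for real inference


if __name__ == "__main__":
    key = "models/example.bin"  # hypothetical object key
    for _ in range(100):
        predict_without_cache(key)
    print("downloads without cache:", storage.downloads)  # 100

    storage.downloads = 0
    for _ in range(100):
        predict_with_cache(key)
    print("downloads with cache:", storage.downloads)  # 1
```

`lru_cache` keeps the example short, but under concurrency it may call the loader more than once if two threads miss the cache at the same time; wrapping the load in a lock avoids that duplicate download.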