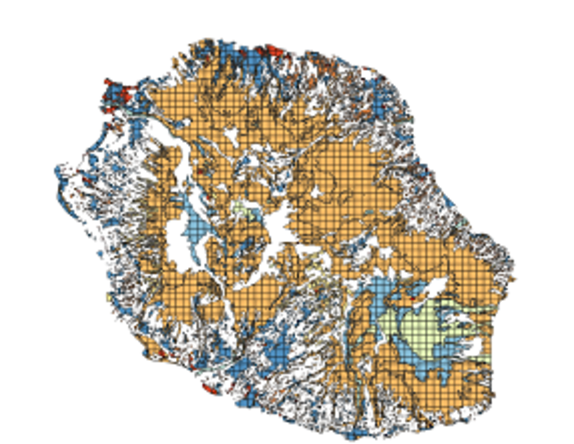

The European Conference on Machine Learning and Principles and Practice of Knowledge Discovery in Databases (ECML PKDD) ran a machine learning competition in which the task was to classify land cover.

Unfortunately, I was unable to submit my predictions on time. My model reached 96.34% accuracy, which would have placed 4th in the competition. I describe my approach below anyway.

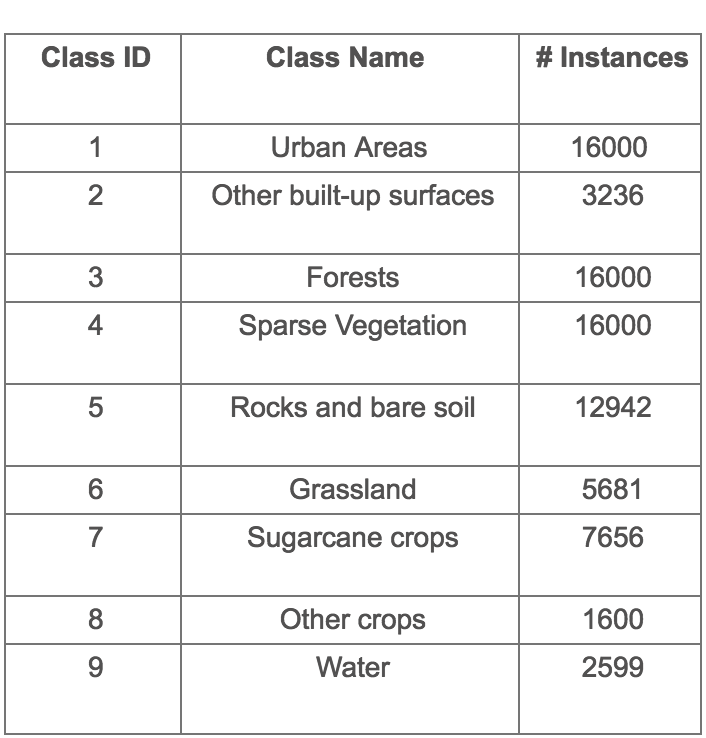

Land cover classification was posed as a multi-class problem with 9 classes.

The picture below depicts the class distribution.

My Approach:

The data had 230 columns containing both negative and positive values that represent the land cover.

Outliers Removal:

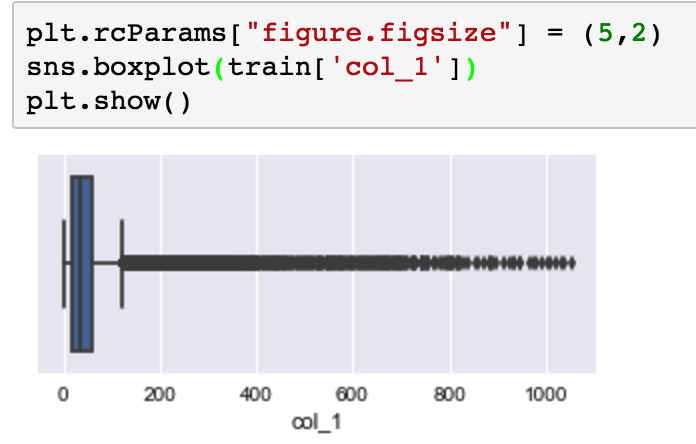

Every column had a few rows with outliers, which I removed.
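The post does not say which rule was used to flag outliers. As a minimal sketch, assuming the common 1.5 × IQR rule (the same rule a boxplot's whiskers visualize) and a hypothetical helper named `remove_iqr_outliers`, the step could look like this:

```python
import pandas as pd


def remove_iqr_outliers(df, columns, k=1.5):
    """Drop rows where any of `columns` falls outside [Q1 - k*IQR, Q3 + k*IQR].

    Assumption: the 1.5*IQR rule; the original post does not name its rule.
    """
    q1 = df[columns].quantile(0.25)
    q3 = df[columns].quantile(0.75)
    iqr = q3 - q1
    lower, upper = q1 - k * iqr, q3 + k * iqr
    # A row is kept only if every selected column lies within its own bounds.
    within = ((df[columns] >= lower) & (df[columns] <= upper)).all(axis=1)
    return df[within]


# Toy data: col1 has one obvious outlier in the last row.
toy = pd.DataFrame({
    "col1": [1.0, 2.0, 3.0, 4.0, 100.0],
    "col2": [5.0, 6.0, 7.0, 8.0, 9.0],
})
cleaned = remove_iqr_outliers(toy, ["col1", "col2"])
```

With 230 columns, dropping a row as soon as any single column is out of bounds can discard a lot of data, so it may be worth checking how many rows survive before committing to a threshold.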

Boxplot depicting outliers in variable col1:

Feature Engineering:

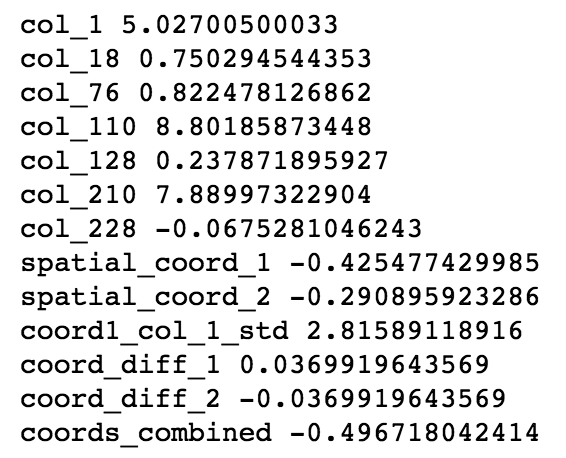

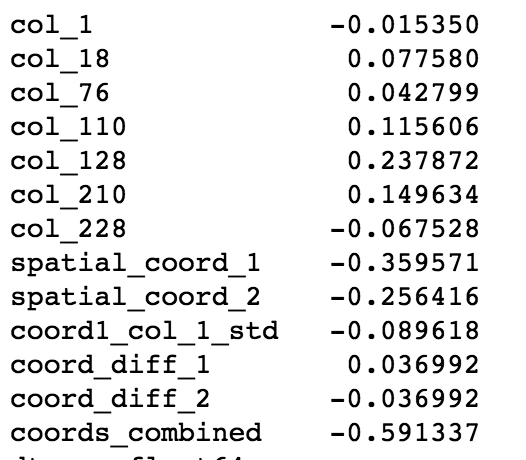

I extracted several features. Among them, the following engineered features survived feature selection:

- coord1_col_1_std: standard deviation of col1 grouped by coord1.

- coord_diff_1: coord1 minus coord2.

- coord_diff_2: coord2 minus coord1.

- coords_combined: coord1 + coord2.

Overall, I ended up using 13 features after feature selection.
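The four engineered features listed above can be sketched with pandas. This is only an illustration on a toy frame: the column names `coord1`, `coord2` and `col1` follow the feature descriptions, and reading "coord1 + coord2" as arithmetic addition is an assumption.

```python
import pandas as pd

# Toy frame standing in for the competition data.
df = pd.DataFrame({
    "coord1": [1, 1, 2, 2],
    "coord2": [3, 5, 3, 5],
    "col1": [0.5, 1.5, 2.0, 4.0],
})

# Standard deviation of col1 within each coord1 group, broadcast back to every row.
df["coord1_col_1_std"] = df.groupby("coord1")["col1"].transform("std")

# Signed coordinate differences in both directions.
df["coord_diff_1"] = df["coord1"] - df["coord2"]
df["coord_diff_2"] = df["coord2"] - df["coord1"]

# Assumption: "coord1 + coord2" means the arithmetic sum.
df["coords_combined"] = df["coord1"] + df["coord2"]
```

Using `transform` instead of `agg` keeps one value per original row, so the group statistic can be used directly as a model feature.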

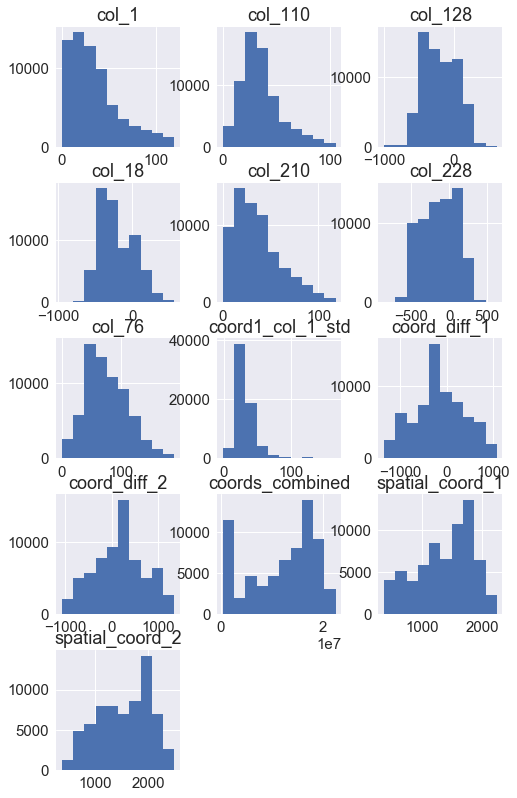

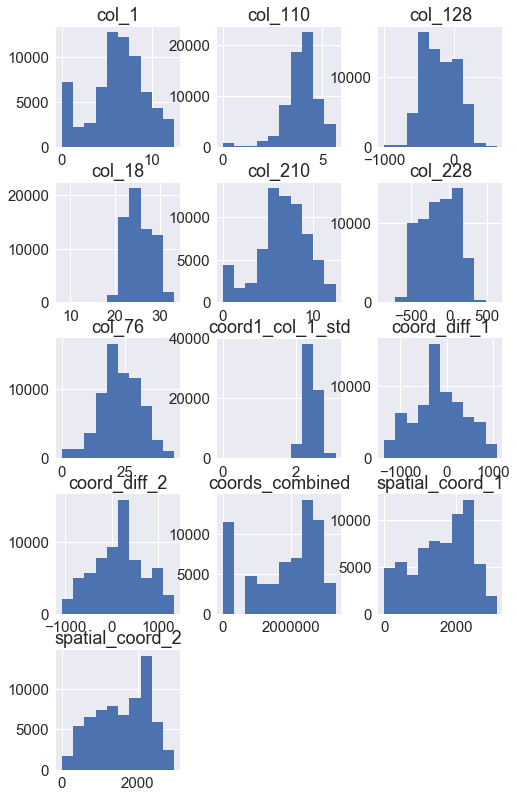

Box-Cox Transformation for Skewed Variables:

Most of the variables were highly skewed.

I applied a Box-Cox transformation to variables whose skewness was beyond ±0.25.
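A minimal sketch of this step with `scipy.stats.boxcox` follows. Box-Cox requires strictly positive input, and the data here contains negative values, so the sketch shifts each column by its minimum first. That shift, and the helper name `boxcox_skewed`, are assumptions; the post does not say how negative values were handled.

```python
import numpy as np
import pandas as pd
from scipy.stats import boxcox, skew

SKEW_THRESHOLD = 0.25  # threshold quoted in the text


def boxcox_skewed(df, columns, threshold=SKEW_THRESHOLD):
    """Box-Cox transform every column whose |skewness| exceeds `threshold`."""
    out = df.copy()
    for col in columns:
        values = out[col].to_numpy(dtype=float)
        if abs(skew(values)) <= threshold:
            continue  # roughly symmetric, leave untouched
        # Assumption: shift so the minimum becomes 1.0, because Box-Cox
        # is only defined for strictly positive values.
        shifted = values - values.min() + 1.0
        transformed, _ = boxcox(shifted)  # lambda fitted by maximum likelihood
        out[col] = transformed
    return out


rng = np.random.default_rng(0)
toy = pd.DataFrame({
    "skewed": rng.lognormal(size=500),      # heavily right-skewed
    "symmetric": np.linspace(-1, 1, 500),   # skewness of about zero
})
result = boxcox_skewed(toy, ["skewed", "symmetric"])
```

In a train/validation setup, the fitted lambda and the shift would need to be stored from the training data and reused on held-out rows.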

Standardize data:

I applied standard scaling (zero mean, unit variance per column) to standardize the data.
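With scikit-learn, standard scaling is typically done with `StandardScaler`. The sketch below uses toy arrays and assumes the scaler is fitted on the training rows only and then reused on validation rows; the post does not describe how the split was handled.

```python
import numpy as np
from sklearn.preprocessing import StandardScaler

X_train = np.array([[1.0, 10.0], [2.0, 20.0], [3.0, 30.0]])
X_valid = np.array([[2.0, 40.0]])

scaler = StandardScaler()
X_train_scaled = scaler.fit_transform(X_train)  # learn mean/std on training rows only
X_valid_scaled = scaler.transform(X_valid)      # reuse the training statistics
```

Each training column ends up with mean 0 and standard deviation 1, while validation values are expressed relative to the training distribution.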

Things I tried that didn't improve the validation score:

- Polynomial features / feature interactions.

- Means, standard deviations, and medians grouped by coordinates.

- Robust Scaling before removing the outliers.

- Stacking multiple models.

- Max voting based on multiple models.

- Dimensionality reduction.
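For reference, max voting across several models can be sketched with scikit-learn's `VotingClassifier`. The choice of base models, their hyperparameters, and the synthetic data are all assumptions for illustration; the post does not say which models were combined.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import (ExtraTreesClassifier, RandomForestClassifier,
                              VotingClassifier)
from sklearn.neighbors import KNeighborsClassifier

# Small synthetic multi-class problem standing in for the real data.
X, y = make_classification(n_samples=300, n_features=13, n_informative=8,
                           n_classes=3, n_clusters_per_class=1, random_state=0)

voter = VotingClassifier(
    estimators=[
        ("et", ExtraTreesClassifier(n_estimators=50, random_state=0)),
        ("rf", RandomForestClassifier(n_estimators=50, random_state=0)),
        ("knn", KNeighborsClassifier()),
    ],
    voting="hard",  # each model casts one vote; the majority label wins
)
voter.fit(X, y)
predictions = voter.predict(X)
```

Voting tends to help most when the base models make different kinds of errors, which may explain why it added little on top of an already strong tree ensemble.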

Model Scores:

I tried several models which resulted in the following local validation scores:

| Model | Validation Score |
|---|---|
| XGBoost (boosting) | 0.96 |
| Linear Regression | 0.68 |
| Passive Aggressive Classifier | 0.47 |
| SGD Classifier | 0.61 |
| Linear Discriminant Analysis | 0.67 |
| KNeighbors Classifier | 0.88 |
| Decision Tree Classifier | 0.89 |
| GaussianNB | 0.64 |
| BernoulliNB | 0.57 |
| AdaBoost Classifier | 0.50 |
| Gradient Boosting Classifier | 0.89 |
| Random Forest Classifier | 0.93 |
| Extra Trees Classifier | 0.95 |
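A comparison like the one in the table can be produced with a simple loop over candidate models. The sketch below is not the original code: it uses synthetic data, a single stratified hold-out split, and only a few scikit-learn models (XGBoost is left out to keep the example dependency-free), all of which are assumptions.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import ExtraTreesClassifier, RandomForestClassifier
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier
from sklearn.tree import DecisionTreeClassifier

# Synthetic stand-in for the competition data: 9 classes, 13 features.
X, y = make_classification(n_samples=900, n_features=13, n_informative=8,
                           n_classes=9, n_clusters_per_class=1, random_state=0)

# Assumption: one stratified 80/20 hold-out split as the local validation set.
X_train, X_valid, y_train, y_valid = train_test_split(
    X, y, test_size=0.2, stratify=y, random_state=0)

models = {
    "KNeighbors Classifier": KNeighborsClassifier(),
    "Decision Tree Classifier": DecisionTreeClassifier(random_state=0),
    "Random Forest Classifier": RandomForestClassifier(n_estimators=100, random_state=0),
    "Extra Trees Classifier": ExtraTreesClassifier(n_estimators=100, random_state=0),
}

scores = {}
for name, model in models.items():
    model.fit(X_train, y_train)
    scores[name] = model.score(X_valid, y_valid)  # accuracy on held-out rows

# Print models from best to worst validation accuracy.
for name, score in sorted(scores.items(), key=lambda item: item[1], reverse=True):
    print(f"{name}: {score:.2f}")
```

The scores printed here come from synthetic data and will not match the table; only the evaluation pattern carries over.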

The code is available on GitHub.