Training a foundation model from scratch is extremely expensive: it requires massive datasets, powerful hardware, and months of computation. Fine-tuning an existing Large Language Model (LLM) is a more cost-effective alternative. LLMs are designed to be general-purpose, covering a wide range of topics but lacking task-specific expertise. Fine-tuning adapts them to specialized domains such as healthcare, finance, or customer support, which can improve accuracy and relevance. This approach saves time and cost and typically gives better performance on the target task, without building a model from the ground up.

In this article, I share code snippets that:

- Convert the data to the JSONL format required for OpenAI fine-tuning

- Divide the dataset into training and validation sets

- Use hyperparameter tuning to test the parameters that OpenAI exposes: batch size, learning rate multiplier, and number of epochs

- Select the best parameters and fine-tune the OpenAI model

- Visualize metrics such as the best parameters and the training and validation loss

I used the Samantha bot dataset, available on Hugging Face at https://huggingface.co/datasets/cognitivecomputations/samantha-data

Let's go through the code:

Import the necessary libraries and set up the OpenAI client

    import csv
    import json
    import optuna
    import os
    import numpy as np
    import matplotlib.pyplot as plt
    from openai import OpenAI
    from time import sleep
    from collections import defaultdict

    # OpenAI API Key
    open_ai_key = "ADD_OPENAI_KEY_HERE"
    client = OpenAI(api_key=open_ai_key)

Download the dataset and parse each line as JSON

    !git clone https://huggingface.co/datasets/cognitivecomputations/samantha-data

    data_path = "samantha-data/data/howto_conversations.jsonl"

    with open(data_path) as f:
        jsonl_input_dataset = [json.loads(line) for line in f]

The code above clones the dataset from Hugging Face and parses each line of the JSONL file into a Python dictionary.
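As an optional extension, the loading step can skip blank lines and report which line failed to parse. This is a minimal sketch; the helper name `load_jsonl` is hypothetical and is not part of the original code.

```python
import json

def load_jsonl(path):
    """Parse a JSONL file into a list of Python objects, skipping blank lines."""
    records = []
    with open(path, encoding="utf-8") as f:
        for line_number, line in enumerate(f, start=1):
            line = line.strip()
            if not line:
                continue  # tolerate empty lines, e.g. a trailing newline
            try:
                records.append(json.loads(line))
            except json.JSONDecodeError as err:
                # Include the line number so a malformed record is easy to find
                raise ValueError(f"{path}:{line_number}: invalid JSON ({err})") from err
    return records
```

It could be used as a drop-in replacement for the list comprehension, for example `jsonl_input_dataset = load_jsonl(data_path)`.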

Convert the JSON data to the chat format required for OpenAI fine-tuning

    def convert_to_openai_format(conversation_str, system_message=None):
        # Each record is a dict; the dialogue text is stored under "conversation"
        conversation_str = conversation_str['conversation']

        # Split the conversation into individual turns (separated by blank lines)
        lines = conversation_str.split('\n\n')

        # Initialize the messages list
        messages = []

        # Include the system message if provided
        if system_message:
            messages.append({
                "role": "system",
                "content": system_message
            })

        # Iterate through the turns and format the messages
        for line in lines:
            # Split each turn at the first colon to separate the speaker and content
            parts = line.split(': ', 1)
            if len(parts) < 2:
                continue

            # Identify the role based on the speaker's name
            role = "user" if parts[0].strip() == "Theodore" else "assistant"

            # Format the message
            message = {
                "role": role,
                "content": parts[1].strip()
            }
            messages.append(message)

        # Create the final output dictionary
        output_dict = {
            "messages": messages
        }
        return output_dict

    system_message = """You are Samantha, a helpful assistant who can help with a variety of tasks. You are friendly and often flirt."""

    dataset = []
    for data in jsonl_input_dataset:
        record = convert_to_openai_format(data, system_message=system_message)
        dataset.append(record)

The code above takes the parsed JSON records and converts them into the OpenAI chat format, which is the format expected by both the Chat Completions API and fine-tuning datasets.

Each message is a dictionary that contains a role and the message content.
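To make the conversion concrete, here is a condensed, self-contained sketch of the same idea applied to a made-up two-turn conversation. The function name `to_chat_messages` and the sample text are illustrative assumptions; only the "Speaker: text" layout with blank lines between turns follows the code above.

```python
def to_chat_messages(conversation, user_name="Theodore"):
    """Split 'Speaker: text' turns (separated by blank lines) into chat messages."""
    messages = []
    for turn in conversation.split("\n\n"):
        speaker, separator, content = turn.partition(": ")
        if not separator:
            continue  # skip fragments without a 'Speaker: ' prefix
        role = "user" if speaker.strip() == user_name else "assistant"
        messages.append({"role": role, "content": content.strip()})
    return messages

# Made-up conversation in the same layout as the dataset
sample = "Theodore: How do I boil an egg?\n\nSamantha: Simmer it for about ten minutes."
for message in to_chat_messages(sample):
    print(message)
```

Every speaker other than the user name is mapped to the assistant role, which matches the behavior of `convert_to_openai_format`.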

Validation: check whether there are any format errors

    # Format error checks
    format_errors = defaultdict(int)

    for ex in dataset:
        if not isinstance(ex, dict):
            format_errors["data_type"] += 1
            continue

        messages = ex.get("messages", None)
        if not messages:
            format_errors["missing_messages_list"] += 1
            continue

        for message in messages:
            if "role" not in message or "content" not in message:
                format_errors["message_missing_key"] += 1

            if any(k not in ("role", "content", "name") for k in message):
                format_errors["message_unrecognized_key"] += 1

            if message.get("role", None) not in ("system", "user", "assistant"):
                format_errors["unrecognized_role"] += 1

            content = message.get("content", None)
            if not content or not isinstance(content, str):
                format_errors["missing_content"] += 1

        if not any(message.get("role", None) == "assistant" for message in messages):
            format_errors["example_missing_assistant_message"] += 1

    if format_errors:
        print("Found errors:")
        for k, v in format_errors.items():
            print(f"{k}: {v}")
    else:
        print("No errors found")

The code above checks whether each conversation follows OpenAI's expected format: it verifies that the "messages" list is present, that every message has "role" and "content" fields, that there are no unrecognized keys or roles, and that each example contains at least one "assistant" message.

Divide the dataset into training and validation sets and save them in JSONL format

    # Function to save a list of conversations as JSONL (one JSON object per line)
    def save_to_jsonl(conversations, file_path):
        with open(file_path, 'w') as file:
            for conversation in conversations:
                json_line = json.dumps(conversation)
                file.write(json_line + '\n')

    # Training dataset
    save_to_jsonl(dataset[:10], 'samantha-data/samantha_task_train.jsonl')

    # Validation dataset
    save_to_jsonl(dataset[10:16], 'samantha-data/samantha_task_validation.jsonl')

As shown above, I split the dataset into training and validation sets. You can keep more samples in each set, depending on the use case; I used a very small dataset here for illustration purposes.
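For larger datasets, a shuffled split by ratio is a common alternative to fixed slices. The sketch below is one way to do that; the helper `train_validation_split`, the 20% validation fraction, and the seed are assumptions chosen for illustration, not part of the original workflow.

```python
import random

def train_validation_split(records, validation_fraction=0.2, seed=42):
    """Shuffle a copy of the records and split it into (train, validation)."""
    if not 0 < validation_fraction < 1:
        raise ValueError("validation_fraction must be between 0 and 1")
    shuffled = list(records)  # copy so the caller's list is left untouched
    random.Random(seed).shuffle(shuffled)  # seeded so the split is reproducible
    n_validation = max(1, int(len(shuffled) * validation_fraction))
    return shuffled[n_validation:], shuffled[:n_validation]
```

The two returned lists can then be written out with `save_to_jsonl` in the same way as the slices above.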

Upload the datasets to OpenAI and get the file IDs

    def upload_training_file(file_path):
        """Upload training file to OpenAI"""
        with open(file_path, "rb") as file:
            response = client.files.create(
                file=file,
                purpose="fine-tune"
            )
        return response.id

    training_file_id = upload_training_file("samantha-data/samantha_task_train.jsonl")
    validation_file_id = upload_training_file("samantha-data/samantha_task_validation.jsonl")

The code above uploads the training and validation datasets to OpenAI and returns a file ID for each.

Create the fine-tuning job and monitor it

    def create_fine_tuning_job(training_file_id, validation_file_id, model, batch_size, learning_rate_multiplier, n_epochs):
        response = client.fine_tuning.jobs.create(
            training_file=training_file_id,
            validation_file=validation_file_id,
            model=model,
            hyperparameters={
                "batch_size": batch_size,
                "learning_rate_multiplier": learning_rate_multiplier,
                "n_epochs": n_epochs
            }
        )
        return response.id

    import re
    import time

    def monitor_job(job_id):
        training_losses = []
        validation_losses = []
        seen_event_ids = set()  # avoid recording the same event on every poll

        while True:
            job = client.fine_tuning.jobs.retrieve(job_id)
            print(f"Status: {job.status}")

            events = client.fine_tuning.jobs.list_events(fine_tuning_job_id=job_id, limit=50)
            # Events are listed newest first, so reverse them to keep losses in step order
            for event in reversed(events.data):
                if event.id in seen_event_ids:
                    continue
                seen_event_ids.add(event.id)

                message = event.message.lower()
                if "loss" in message:
                    print(message)
                    # Extract both training and validation loss
                    try:
                        train_match = re.search(r"training loss=\s*([\d.]+)", message)
                        val_match = re.search(r"validation loss=\s*([\d.]+)", message)
                        if train_match:
                            training_losses.append(float(train_match.group(1)))
                        if val_match:
                            validation_losses.append(float(val_match.group(1)))
                    except Exception as e:
                        print(f"Error parsing loss: {e}")

            # Check for a terminal status only after reading the latest events,
            # so losses reported just before the job finished are not missed
            if job.status in ["succeeded", "failed", "cancelled"]:
                break

            time.sleep(30)  # Wait before checking again

        return job, training_losses, validation_losses

The code above creates a fine-tuning job with the hyperparameters that OpenAI accepts. The monitoring function polls the job and records the training and validation losses reported in the job events, so that they can be used later for visualization.
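The loss extraction can be tried in isolation, without calling the API. The sketch below assumes an event message of the form "Step 10/100: training loss=0.52, validation loss=0.61"; that wording is an assumption for illustration, and the real messages may differ. `parse_losses` is a hypothetical helper.

```python
import re

# Matches "training loss=0.52" or "validation loss=0.61" in a lowercased message
LOSS_PATTERN = re.compile(r"(training|validation) loss=\s*([0-9]*\.?[0-9]+)")

def parse_losses(message):
    """Return the losses found in an event message, keyed by 'training' or 'validation'."""
    return {kind: float(value) for kind, value in LOSS_PATTERN.findall(message.lower())}

# Assumed example of an event message; the real wording may vary
example = "Step 10/100: training loss=0.52, validation loss=0.61"
print(parse_losses(example))
```

Unlike `[\d.]+`, this number pattern does not swallow a trailing period at the end of a sentence, so the conversion to `float` cannot fail on it.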

Try various hyperparameters using the Optuna library

    def objective(trial):
        batch_size = trial.suggest_categorical("batch_size", [4, 8, 16, 32])
        # suggest_float with log=True replaces the deprecated suggest_loguniform
        learning_rate_multiplier = trial.suggest_float("learning_rate_multiplier", 0.001, 0.1, log=True)
        n_epochs = trial.suggest_int("n_epochs", 1, 25)
        model = "gpt-4o-mini-2024-07-18"

        job_id = create_fine_tuning_job(training_file_id, validation_file_id, model, batch_size, learning_rate_multiplier, n_epochs)
        job, training_losses, validation_losses = monitor_job(job_id)

        if job.status == "succeeded" and validation_losses:
            # Score the trial by its lowest recorded validation loss
            return min(validation_losses)
        else:
            print(job)
            print(job.status)
            print("Validation losses were empty or not recorded.")
            return float("inf")

    study = optuna.create_study(direction="minimize")
    study.optimize(objective, n_trials=5)

    print("Best hyperparameters:", study.best_params)

In the code above, I use the Optuna library to search across combinations of hyperparameters and find the set with the lowest validation loss.
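The learning rate multiplier is sampled log-uniformly, so that small values such as 0.001 to 0.01 get as much attention as 0.01 to 0.1. The sketch below illustrates that idea with the standard library only. It is not Optuna's implementation, and `sample_log_uniform` is a hypothetical helper.

```python
import math
import random

def sample_log_uniform(low, high, rng):
    """Draw a value whose logarithm is uniformly distributed between log(low) and log(high)."""
    return math.exp(rng.uniform(math.log(low), math.log(high)))

rng = random.Random(0)  # fixed seed so the run is repeatable
samples = [sample_log_uniform(0.001, 0.1, rng) for _ in range(10_000)]

# About half of the samples should fall below 0.01, the geometric midpoint of the range
share_below_midpoint = sum(s < 0.01 for s in samples) / len(samples)
print(f"Share of samples below 0.01: {share_below_midpoint:.2f}")
```

With plain uniform sampling over the same range, only about 9% of the samples would fall below 0.01, so the small multipliers would rarely be tried.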

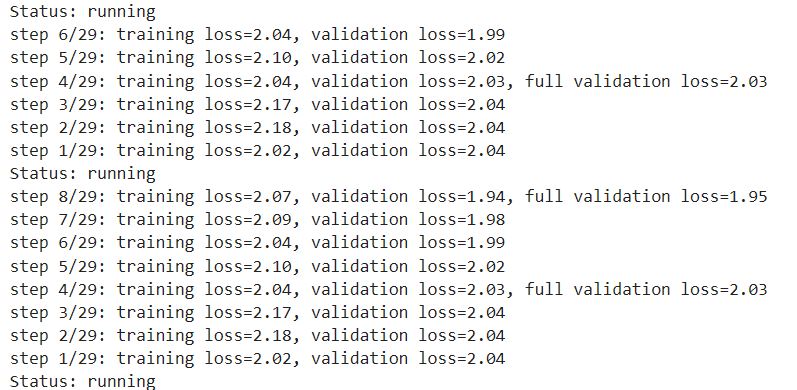

As the images below show, for each set of parameters the validation loss keeps dropping as the number of steps increases, which suggests that the fine-tuning jobs are training well.

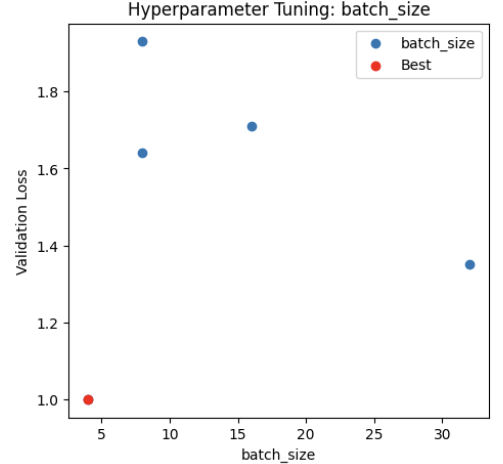

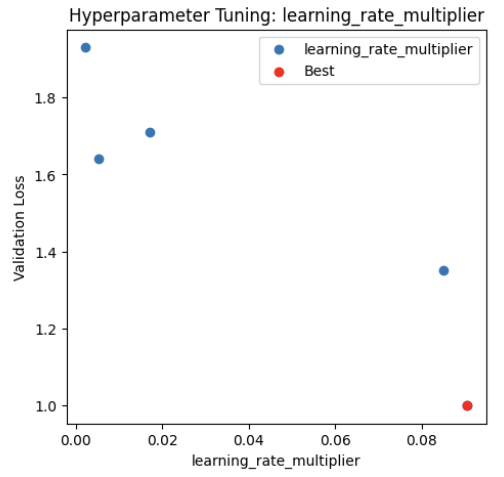

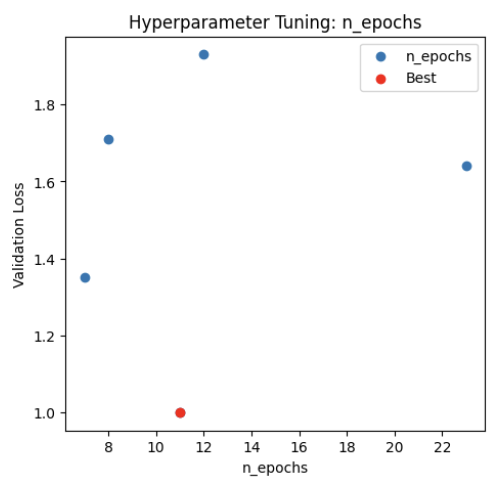

After hyperparameter tuning, we got the following best parameters:

- batch size = 4

- learning rate multiplier = 0.09

- n epochs = 11

Let's plot and see how it looks:

Batch Size:

Learning Rate Multiplier:

Number of Epochs:
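Plots like the three named above could be produced along the following lines. This is a sketch under assumptions: it presumes each plot shows one hyperparameter against the best validation loss of each trial, the helper names are hypothetical, and the example results are invented for illustration (they are not the values from the study).

```python
def param_series(trial_results, param_name):
    """Return (x, y) lists of parameter values and losses, sorted by parameter value.

    trial_results is a list of (params_dict, loss) pairs; trials without a
    finite loss (for example failed jobs) are dropped.
    """
    points = [
        (params[param_name], loss)
        for params, loss in trial_results
        if loss is not None and loss != float("inf")
    ]
    points.sort()
    return [x for x, _ in points], [y for _, y in points]

def plot_param_vs_loss(trial_results, param_name):
    """Scatter-plot one hyperparameter against the best validation loss of each trial."""
    import matplotlib.pyplot as plt  # imported here so param_series works without it

    x, y = param_series(trial_results, param_name)
    plt.figure()
    plt.scatter(x, y)
    plt.xlabel(param_name)
    plt.ylabel("best validation loss")
    plt.title(f"{param_name} vs. validation loss")
    plt.show()

# Hypothetical results, for illustration only
example_results = [
    ({"batch_size": 8, "n_epochs": 3}, 1.2),
    ({"batch_size": 4, "n_epochs": 5}, 0.9),
    ({"batch_size": 16, "n_epochs": 2}, float("inf")),
]
print(param_series(example_results, "batch_size"))
```

With an Optuna study, the input list can be built as `[(t.params, t.value) for t in study.trials]` and passed to `plot_param_vs_loss` once per parameter name.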

Using the best fine-tuned model:
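The snippet that follows refers to `final_job`, which is not defined in the code shown so far. One plausible way to obtain it, sketched here as an assumption, is to start one more job with the best hyperparameters and wait for it to finish. The helper `run_final_job` is hypothetical; it receives the job-creation and monitoring functions as arguments so that it can also be exercised without calling the API.

```python
def run_final_job(best_params, training_file_id, validation_file_id,
                  create_job, monitor, model="gpt-4o-mini-2024-07-18"):
    """Launch a fine-tuning job with the best hyperparameters and wait for it to finish."""
    job_id = create_job(
        training_file_id,
        validation_file_id,
        model,
        best_params["batch_size"],
        best_params["learning_rate_multiplier"],
        best_params["n_epochs"],
    )
    # monitor is expected to return (job, training_losses, validation_losses)
    return monitor(job_id)
```

With the functions defined earlier, this might be called as `final_job, train_losses, val_losses = run_final_job(study.best_params, training_file_id, validation_file_id, create_fine_tuning_job, monitor_job)`.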

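    # final_job is assumed to be the completed job object (as returned by
    # monitor_job) for the run trained with the best hyperparameters
    fine_tuned_model = final_job.fine_tuned_model
    print(f"Fine-tuned model ID: {fine_tuned_model}")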

The code above prints the ID of the best fine-tuned model. You can use this ID for inference in LangGraph, LangChain, LlamaIndex, or any other framework.
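For a quick check without any framework, the model ID can also be passed directly to the Chat Completions API of the OpenAI client. The sketch below is a minimal example; `ask_fine_tuned_model` is a hypothetical helper, and the client is passed in as an argument rather than read from a global.

```python
def ask_fine_tuned_model(client, model_id, user_message, system_message=None):
    """Send one user message to a fine-tuned model through the Chat Completions API."""
    messages = []
    if system_message:
        messages.append({"role": "system", "content": system_message})
    messages.append({"role": "user", "content": user_message})
    response = client.chat.completions.create(model=model_id, messages=messages)
    # The reply text is on the first choice of the response
    return response.choices[0].message.content
```

It would typically be called with the same system message that was used for training, for example `ask_fine_tuned_model(client, fine_tuned_model, "How do I bake bread?", system_message=system_message)`.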